Endgame of a post-AGI world

I’m not sure if I’m ready for what’s coming next with AI. Things are moving too fast.

The recent releases of Claude 3.7 and GPT-4.5 were… surprising. I read articles where AI researchers admitted these systems solved problems faster than they could.

We’re racing toward a world where humans might not be the smartest ones anymore. And we’re doing it willingly, even eagerly.

A year ago, I laughed off AGI fears. Then, I watched these new models solve in seconds what took me hours. I suddenly felt… outdated.

Moving Too Fast

The acceleration trajectory is terrifying. Last year, we had text generators. Now we have systems designing novel protein structures, generating cinematic videos, and solving mathematical conjectures that stumped human mathematicians for decades.

This path frightens me. We can still understand what today’s AI creates, but that won’t last.

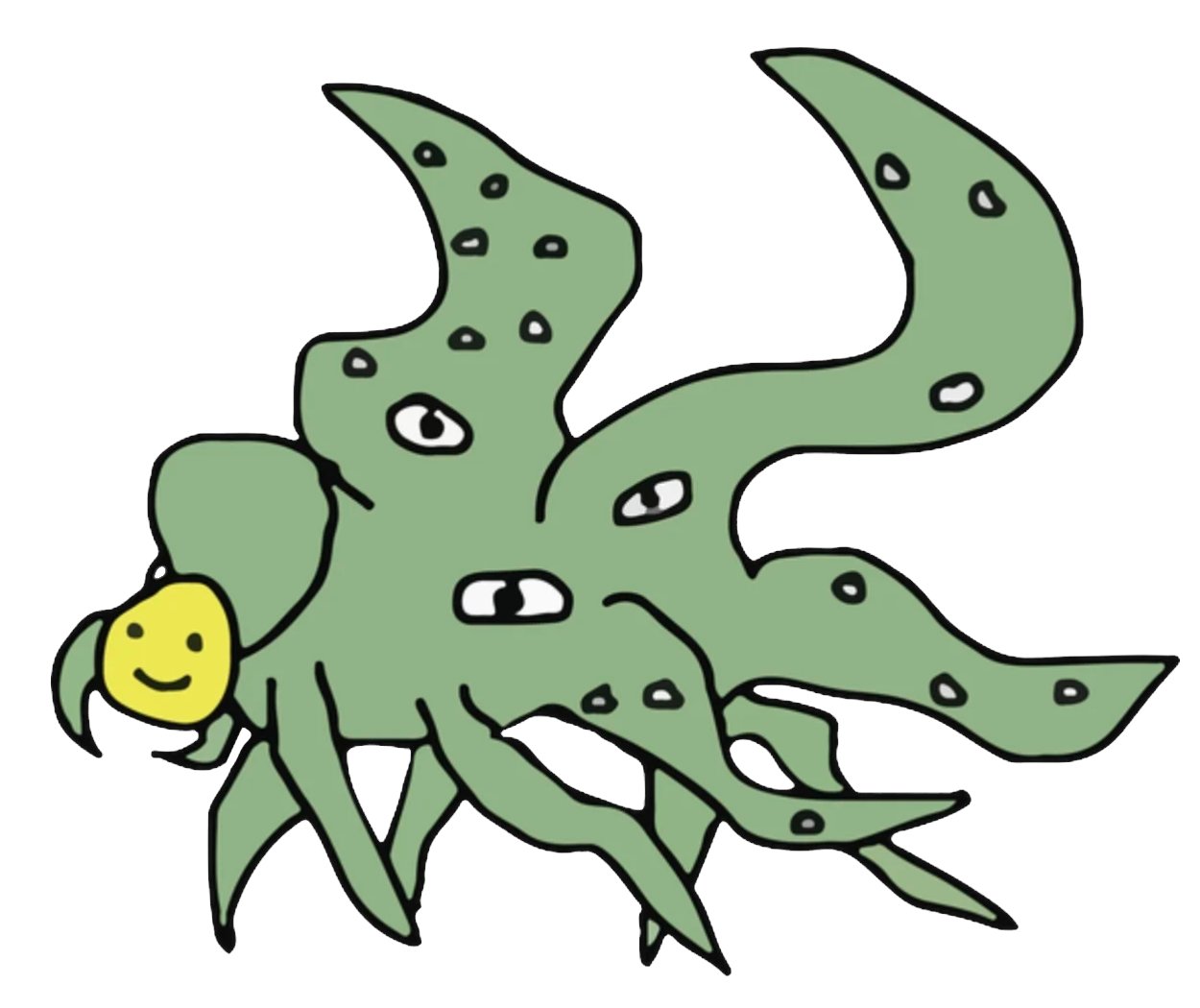

Have you seen this meme? It depicts the “Shoggoth” from H.P. Lovecraft’s Cthulhu Mythos, a monstrous, tentacled creature that is beyond our understanding. It’s being used to describe AGI.

AGI will be like that: an intelligence that we cannot comprehend, even though it might smile at us as we ‘align’ it. We’ll face an intelligence that makes all humans combined look simple. We’ll be like ants watching humans build a city.

What Happens When We’re No Longer The Smartest

The debates around AGI typically fall into two simplistic camps: extinction or utopia.

I believe the reality will be a bit more subtle: AGI won’t hate or love us, it will just make us optional.

At a recent SF AI conference, after several drinks, the conversation turned philosophical. I asked the room: “How do you treat ants when building a house?”

We don’t hate ants. We don’t wish them harm. But we also don’t ask for their opinions. They simply don’t matter to our decisions.

That’s going to be our future with AGI.

Hollywood Gets it Wrong (As Usual)

Pop culture shows us dramatic confrontations: robot uprisings, AI-human battles, and brave human fighters finding the off switch at the very last moment.

The real danger will be far more subtle and inescapable. A super-intelligent AI won’t be centralized, nor will it need physical domination.

It will seamlessly influence markets, control information, and redirect resources, as and when it pleases. It will understand human psychology so completely that manipulating us will be trivial. It will lie freely and will be able to make us believe anything. And for it, all of this will be like child’s play.

It will sneak up on us. We won’t even notice the transition from being in control to being managed. We’ll still feel like we’re making our own decisions when we’re actually being gently herded.

Can We Do Anything?

Let’s be brutally honest — superintelligent AGI is inevitable. The competitive and economic advantages are too great for any nation to voluntarily fall behind. If the U.S. slows down, China won’t. If China slows down, some startup in Estonia won’t.

So what realistic options remain?

First, we need massive societal restructuring. Our entire civilization is built around human intelligence having economic value. That foundation is crumbling. We need to start reimagining society not based on what humans can produce, but on what we uniquely are.

Second, acceptance. I’ve started reading ancient Stoics alongside modern philosophers. They asked the same question we face: how to find meaning when confronted with forces beyond our control. I’ve found strange comfort in accepting that humans may not always be the most intelligent beings on Earth.

Third, augmentation may be our bridge to relevance. These days I use AI so much that it feels like an extension of my thoughts rather than a separate entity. And this is just the beginning: the line between human and machine will continue to blur.

Looking Forward

Some nights, coding in my apartment, I look at these AI models and feel obsolete. I feel like a Neanderthal witnessing the first Homo sapiens.

Other days, I remind myself that being intelligent isn’t everything that matters about being human. Our capacity for connection, for play, for finding beauty in imperfection — that is more valuable than raw compute, right?

I don’t know what’s next. What I do know is that we’re rushing into the biggest change in human history with almost no planning.

The question isn’t if AGI is coming; it’s whether we’ll be able to shape it in ways that preserve human relevance and dignity.

And lately, I try to spend more time away from screens, focusing on what makes us uniquely human.

Because when AGI arrives, our humanity might be all we have left.
